
Introducing Netsy: An Open Source etcd alternative for Kubernetes that stores data in S3

TL;DR

- Netsy is a new Apache 2.0 licensed etcd alternative for Kubernetes which stores cluster state in S3.

- The goal is to scale from single-node to large multi-node clusters without having to migrate solutions to scale up or down.

- It uses S3 for durable writes and a local SQLite cache for reads.

- Single-node support is available now, and multi-node with PostgreSQL-inspired synchronous replication is expected to land next month.

- Please support us by starring the Netsy GitHub repository!

Today, we’re excited to introduce Netsy, our new Open Source etcd alternative for Kubernetes that stores data in S3. Netsy is being released under the Apache 2.0 license.

Netsy is available on GitHub today and currently supports single-node clusters, with multi-node support expected to land next month, using a durable replication model inspired by PostgreSQL synchronous replication.

If you want to see a cluster backed by Netsy in action, you can launch a single-node Platform-as-a-Service in your own AWS account in under 90 seconds with Nadrama.

If you’re interested in learning more about why we built Netsy, and the journey we went on to get to the current solution, please read on!

The challenge with current solutions

In early 2024, I was considering startup ideas in the infrastructure and dev tools space, and exploring how my knowledge and experience with Cloudflare Workers could potentially enable new architectures and possibilities for users.

At the same time, I’m a huge fan of the Go programming language, containers, and the developer experience Kubernetes provides for container orchestration.

I previously ran the DevTools team at Cloudflare and multiple DevOps enablement teams at Xero. In my last job, as a startup CTO, I migrated aging infrastructure to EKS and reduced infrastructure costs by around 30%.

Kubernetes is great for developers, but the infra takes time and money

Having worked with many developers using Kubernetes in my last few roles, it was clear to me that Kubernetes itself was broadly well received. But setting up and operating clusters, along with all of the components that go on top (like CNI/networking, ingress controllers, and so on), involved a non-trivial baseline cost and complexity, which created barriers for smaller teams and solo developers looking for a container platform.

A managed Kubernetes service can be quite expensive for smaller use cases, with most major clouds charging $73/month for the control plane alone. This is understandable given the complexity of managing etcd and the fact that it should be run with a minimum of three nodes. But hobbyist devs looking for something that could reasonably run on a single server do not want to spend $73/month just for a control plane.

You shouldn’t have to choose different tech for different cluster sizes

There are some fantastic projects that make single-node Kubernetes clusters viable, such as k3s, which uses the kine etcd alternative. However, these solutions face challenges with scaling up. The solutions on offer seemed to force a choice between options for small clusters and options for large clusters - there wasn’t a single solution that scales down to zero and scales up to enterprise level.

After talking with a bunch of developers, it became clear that many wanted the benefits of Kubernetes and the Cloud Native / Open Source ecosystem, but given the cost and challenges, opted for proprietary solutions “in the interim”, knowing that if and when they scale up they’ll migrate to Kubernetes. Some folks refer to this as “the graduation problem”.

To me, this was frustrating. Kubernetes is great, and the Open Source ecosystem around it is fantastic, so why not make it possible to scale down to zero, and scale up to enterprise, with a single solution? The main constraint here seemed to be etcd and its alternatives. So began the path of building what has now become Netsy.

The Evolution of Netsy

The First Attempt: Proxy Everything to Serverless

The first iteration of Netsy aimed to offload as much of the Kubernetes control plane as possible onto Cloudflare Workers, using D1 for data storage and Durable Objects with Hibernated Websockets for coordination. The idea was to minimise the hardware requirements for nodes and offload some of the work to an efficient and scalable serverless platform. After some experimentation, this landed on offloading only etcd.

After attempting to implement gRPC in TypeScript to enable kube-apiserver to connect directly to the Worker, I ended up building a custom gRPC reverse proxy in Go, which forwarded requests from the kube-apiserver to a Cloudflare Worker. This approach worked, but it introduced complexities around managing and coalescing etcd ‘watches’, and the request volumes looked infeasible for production use (millions of reads!).
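To give a feel for what “coalescing watches” means, here is a minimal sketch in Python. It is an illustration of the general idea only, not code from the proxy described above: the `WatchCoalescer` class, the `open_upstream` callback, and the event shape are all hypothetical names chosen for this example. Many local watchers on the same key prefix share a single upstream watch, and each upstream event is fanned out locally.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Event = dict  # assumed shape: {"key": "/registry/pods/web", "value": "..."}


class WatchCoalescer:
    """Shares one upstream watch per key prefix among many local watchers."""

    def __init__(self, open_upstream: Callable[[str], None]):
        # Hypothetical callback that would start a remote watch for a prefix.
        self._open_upstream = open_upstream
        self._watchers: Dict[str, List[Callable[[Event], None]]] = defaultdict(list)

    def watch(self, prefix: str, callback: Callable[[Event], None]) -> None:
        # Only the first watcher for a prefix triggers an upstream watch.
        if prefix not in self._watchers:
            self._open_upstream(prefix)
        self._watchers[prefix].append(callback)

    def dispatch(self, event: Event) -> None:
        # Fan a single upstream event out to every matching local watcher.
        for prefix, callbacks in self._watchers.items():
            if event["key"].startswith(prefix):
                for callback in callbacks:
                    callback(event)


# Usage: two local watchers, one upstream watch.
opened = []
coalescer = WatchCoalescer(opened.append)
seen_a, seen_b = [], []
coalescer.watch("/registry/pods/", seen_a.append)
coalescer.watch("/registry/pods/", seen_b.append)
coalescer.dispatch({"key": "/registry/pods/web", "value": "v1"})
```

A real implementation would also need to handle cancellation, per-watcher start revisions, and reconnects, which is where much of the complexity tends to come from.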

Adding in a local SQLite read cache

In the next iteration, I implemented a local SQLite database on each node, which significantly reduced the number of reads going back to the Cloudflare Worker and made the architecture feasible. All writes from any node would go to the Worker, which would then replicate out to all nodes via websockets.

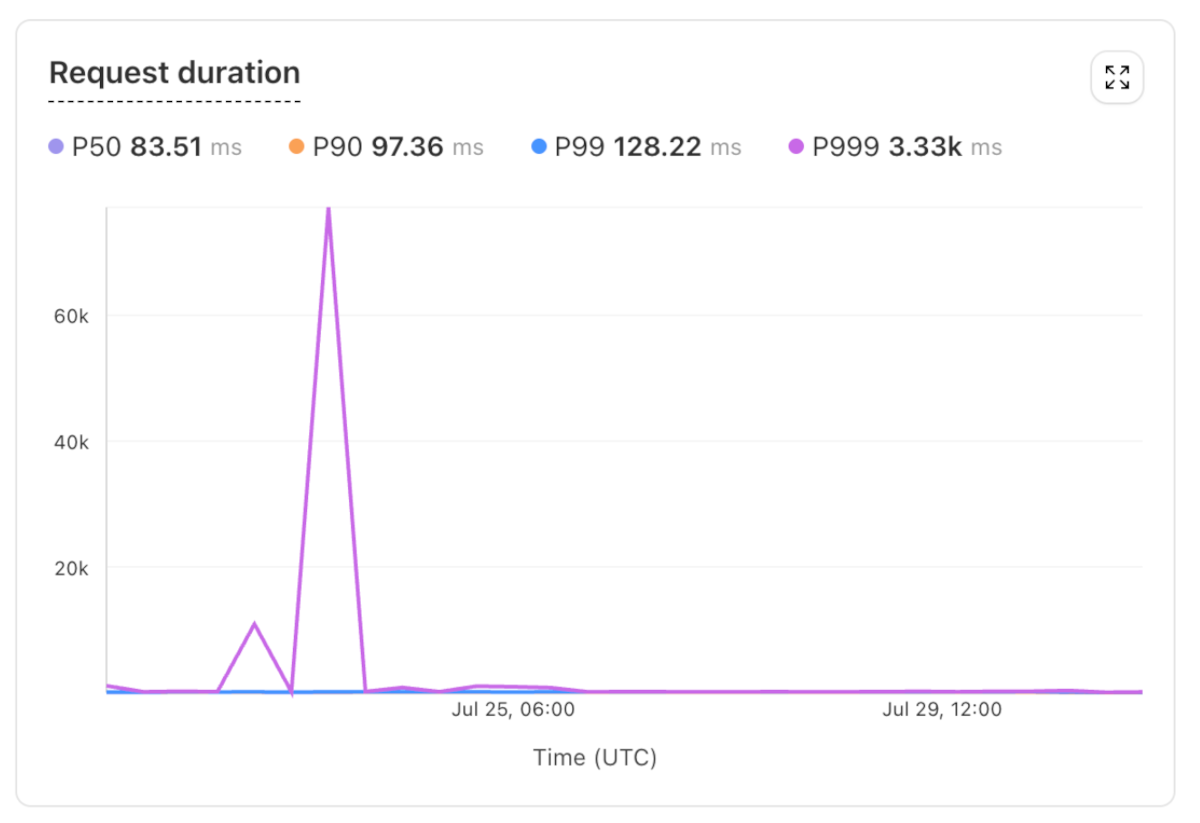
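The shape of this iteration can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the real implementation: the `Hub` class stands in for the Cloudflare Worker, a direct method call stands in for the websocket push, and the table schema and revision counter are invented for the example.

```python
import sqlite3


class Node:
    """A cluster node with a local SQLite read cache (schema is an assumption)."""

    def __init__(self, name: str):
        self.name = name
        self.db = sqlite3.connect(":memory:")
        self.db.execute(
            "CREATE TABLE kv (key TEXT PRIMARY KEY, value TEXT, revision INTEGER)"
        )

    def apply(self, key: str, value: str, revision: int) -> None:
        # Called when a replicated write arrives (a websocket message in the post).
        self.db.execute(
            "INSERT OR REPLACE INTO kv VALUES (?, ?, ?)", (key, value, revision)
        )

    def read(self, key: str):
        # Reads are served locally and never leave the node.
        row = self.db.execute("SELECT value FROM kv WHERE key = ?", (key,)).fetchone()
        return row[0] if row else None


class Hub:
    """Stand-in for the central writer: assigns revisions and broadcasts writes."""

    def __init__(self, nodes):
        self.nodes = nodes
        self.revision = 0

    def write(self, key: str, value: str) -> int:
        self.revision += 1
        for node in self.nodes:
            node.apply(key, value, self.revision)
        return self.revision


# Usage: every write goes through the hub; every read is local.
nodes = [Node("a"), Node("b")]
hub = Hub(nodes)
hub.write("/registry/pods/web", "v1")
hub.write("/registry/pods/web", "v2")
local_values = [node.read("/registry/pods/web") for node in nodes]
```

In this arrangement only writes pay the round trip to the central service, which is why the read volume stops being the bottleneck.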

In development this worked flawlessly, and in initial production tests it worked great - however, during heavier testing prior to launch, p999 write latency became problematic.

This wasn’t an inherent problem with the technology stack so much as the design - when a latency-sensitive application sends its requests over the internet, you need to expect outliers like this.

In hindsight this is all obvious, but hindsight is 20/20, as they say. At the time it was about ideating and experimenting to see what was possible - we wouldn’t be where we are today without this.

Feedback & Learnings

I demoed the early implementation in San Francisco and Austin in late 2024. Two bits of feedback stood out. One was a concern about availability during outages, which the read cache only partially mitigated. The other was about data sovereignty and organisational requirements to keep cluster state data within the same cloud provider account and region.

The goal with Nadrama is to run clusters across all major cloud providers, and possibly support on-prem one day as well. Initially I thought having etcd up in a global cloud would mean you could run a single cluster across clouds - though I later realised it would make more sense to implement independent clusters with connectivity via solutions like WireGuard.

Current Design: S3 + SQLite

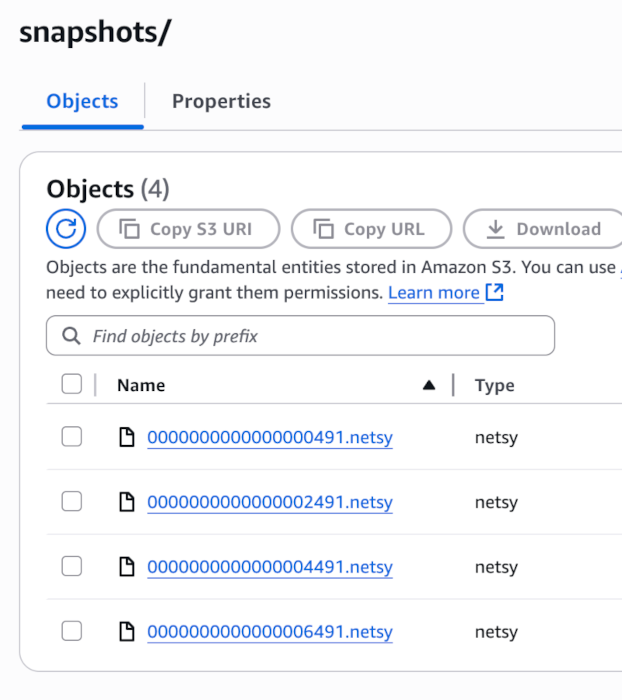

All of this feedback and learning led to the current design: Netsy now uses S3 for synchronous writes and maintains a local SQLite database for reads.

This approach ensures durability, reduces costs, and offers flexibility since any S3-compatible storage can be used.

Note that we use VPC endpoints to keep traffic to S3 in-network and reduce latency. For single-node clusters, this works with acceptable latency.
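As a rough sketch of the write path described above (durable write first, local cache second), consider the following Python example. It is not Netsy’s actual code: `InMemoryObjectStore` is a hypothetical stand-in for an S3-compatible bucket, and the object key layout, record format, and table schema are assumptions made for illustration.

```python
import json
import sqlite3


class InMemoryObjectStore:
    """Hypothetical stand-in for an S3-compatible bucket."""

    def __init__(self):
        self.objects = {}
        self.fail_next_put = False  # lets the example simulate an outage

    def put(self, key: str, body: bytes) -> None:
        if self.fail_next_put:
            self.fail_next_put = False
            raise IOError("simulated object store failure")
        self.objects[key] = body


class Store:
    """Writes go to the object store synchronously; reads come from SQLite."""

    def __init__(self, bucket: InMemoryObjectStore):
        self.bucket = bucket
        self.revision = 0
        self.db = sqlite3.connect(":memory:")
        self.db.execute(
            "CREATE TABLE kv (key TEXT PRIMARY KEY, value TEXT, revision INTEGER)"
        )

    def put(self, key: str, value: str) -> int:
        next_revision = self.revision + 1
        record = json.dumps({"key": key, "value": value, "revision": next_revision})
        # 1. Durable write first: if this raises, nothing below runs.
        self.bucket.put(f"records/{next_revision:020d}.json", record.encode())
        # 2. Only then update the local cache and advance the revision.
        self.db.execute(
            "INSERT OR REPLACE INTO kv VALUES (?, ?, ?)", (key, value, next_revision)
        )
        self.revision = next_revision
        return next_revision

    def get(self, key: str):
        # Reads never touch the object store.
        row = self.db.execute("SELECT value FROM kv WHERE key = ?", (key,)).fetchone()
        return row[0] if row else None


# Usage: a failed durable write leaves the cache and revision untouched.
bucket = InMemoryObjectStore()
store = Store(bucket)
store.put("/registry/pods/web", "v1")
bucket.fail_next_put = True
try:
    store.put("/registry/pods/web", "v2")
except IOError:
    pass
value_after_failure = store.get("/registry/pods/web")
```

The ordering is the point of the sketch: a write is only visible to readers once it has been accepted by durable storage, so the local SQLite database can always be treated as a disposable cache.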

What’s Next for Netsy?

Netsy is now live and ready for single-node clusters. The next step is multi-node support: we’ve completed the design document for it and expect to have it implemented next month.

The design for multi-node is inspired by PostgreSQL’s synchronous replication model, with leader election handled by an ‘elector’ primary node which is assigned out-of-band.

In a Nadrama cluster, assigning the elector role is actually handled by a separate component which uses Cloudflare Workers and Durable Objects for coordination, but in future it could also be another Open Source component.
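For readers unfamiliar with synchronous replication, the general idea is that a primary only acknowledges a write to the client once enough standbys have confirmed they hold it. The sketch below illustrates that idea in Python and nothing more: it is not taken from the Netsy design doc, it does not model the elector role, and the `Primary`, `Standby`, and `required_acks` names are hypothetical.

```python
class Standby:
    """A replica that persists entries and acknowledges them."""

    def __init__(self, name: str, healthy: bool = True):
        self.name = name
        self.healthy = healthy
        self.log = []

    def replicate(self, entry: dict) -> bool:
        # Returning False models a missed acknowledgement.
        if not self.healthy:
            return False
        self.log.append(entry)
        return True


class Primary:
    """Acknowledges a commit only after enough standbys confirm it."""

    def __init__(self, standbys, required_acks: int = 1):
        self.standbys = standbys
        self.required_acks = required_acks
        self.log = []

    def commit(self, entry: dict) -> bool:
        # Write locally, then wait for standby acknowledgements.
        self.log.append(entry)
        acks = sum(1 for standby in self.standbys if standby.replicate(entry))
        # Simplification: a real system would typically block or retry here
        # rather than report failure immediately.
        return acks >= self.required_acks


# Usage: the second commit is not acknowledged because the standby is down.
standby = Standby("b")
primary = Primary([standby], required_acks=1)
first_ok = primary.commit({"revision": 1, "key": "/registry/pods/web", "value": "v1"})
standby.healthy = False
second_ok = primary.commit({"revision": 2, "key": "/registry/pods/web", "value": "v2"})
```

The tradeoff is the usual one for synchronous replication: acknowledged writes survive the loss of the primary, at the cost of write latency and availability depending on the standbys.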

If you want to learn more about the Netsy multi-node design, check out our system design doc in the Netsy GitHub repo - your feedback is welcome!

The aim is for Netsy to enable scaling seamlessly from zero nodes to large multi-node clusters, without the tradeoffs or complexities of current solutions.

It’s been an interesting journey, and we’re excited for what’s next.

Stay tuned for more updates - and please show your support by starring the Netsy GitHub repository. Thanks for reading!
